A New Symphony: The Era of AI-Powered Music Creation with MusicGen

Welcome to the era of AI-powered music creation, a time when the power to create captivating songs extends beyond the echelons of trained musicians to anyone with a vision and a revolutionary tool named MusicGen. My personal journey with MusicGen took me on a fascinating voyage into the world of jazz, an experience that was nothing short of magical.

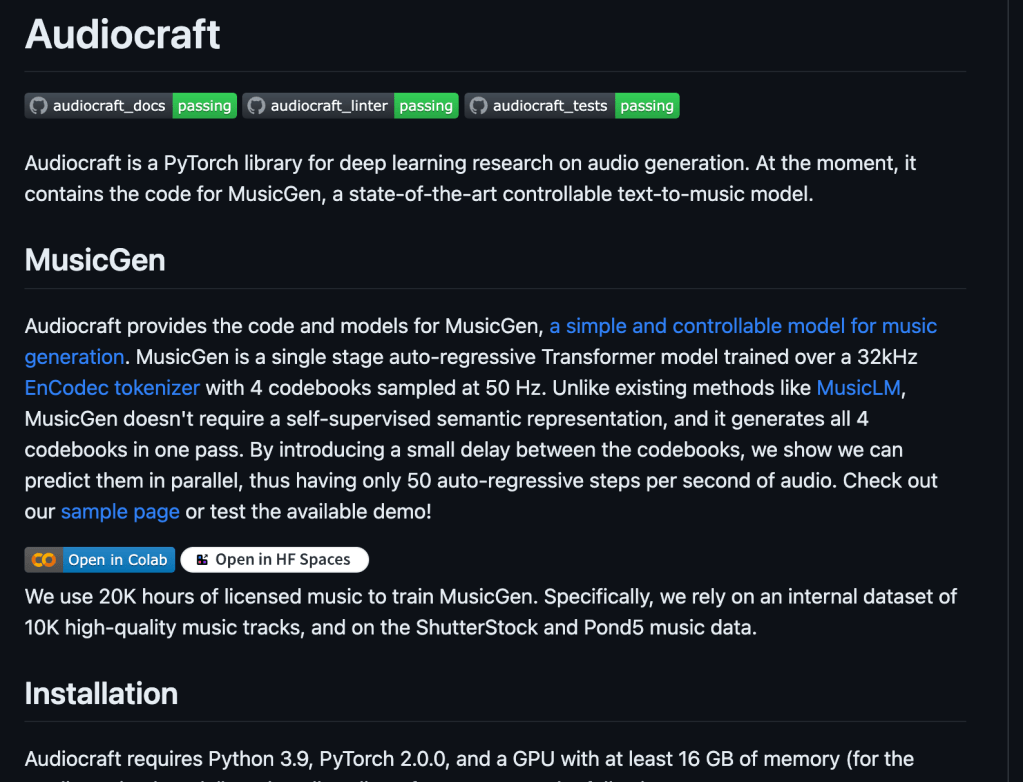

MusicGen is the brainchild of the Audiocraft team at Meta AI, a testament to their innovation and vision. It uses machine learning to convert simple text prompts into intricate, captivating songs, acting as a conduit for the creative vision of its users. Under the hood is a single-stage auto-regressive Transformer model trained over a 32 kHz EnCodec tokenizer, which represents audio as four codebooks sampled at 50 Hz. The model is available in three sizes, with 300M, 1.5B, and 3.3B parameters, letting users trade output quality against speed and memory.
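The figures in this paragraph determine how much work goes into each clip. The arithmetic below is a back-of-the-envelope sketch, assuming the stated numbers (32 kHz audio, four codebooks at 50 Hz) and the "delay" interleaving pattern described in the MusicGen paper, in which codebook k is shifted by k steps so the model can predict all four codebooks at every step. `token_budget` is an illustrative name, not an Audiocraft function.

```python
SAMPLE_RATE_HZ = 32_000  # EnCodec audio sample rate
FRAME_RATE_HZ = 50       # token frames per second of audio
NUM_CODEBOOKS = 4        # parallel codebooks per frame


def token_budget(seconds: float) -> dict:
    """Illustrative token arithmetic for one clip of the given length."""
    frames = round(seconds * FRAME_RATE_HZ)
    return {
        # audio samples summarized by each token frame
        "samples_per_frame": SAMPLE_RATE_HZ // FRAME_RATE_HZ,
        "frames": frames,
        # every frame carries one token from each codebook
        "total_tokens": frames * NUM_CODEBOOKS,
        # flattening all codebooks into one sequence: one step per token
        "steps_flattened": frames * NUM_CODEBOOKS,
        # delay pattern: one step per frame, plus K - 1 steps to drain the offsets
        "steps_delay": frames + NUM_CODEBOOKS - 1,
    }


print(token_budget(30))
# {'samples_per_frame': 640, 'frames': 1500, 'total_tokens': 6000, 'steps_flattened': 6000, 'steps_delay': 1503}
```

Under these assumptions, the delay pattern needs roughly 50 sequential steps per second of audio instead of the 200 that a fully flattened token sequence would require.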

But what exactly is a “single-stage auto-regressive Transformer model”?

Auto-regressive Transformer Model: The Conductor Behind the Music

In machine learning, a Transformer is a model architecture built around self-attention, a mechanism that lets each position in a sequence weigh how relevant every other position is when building its representation. This makes Transformers particularly effective on sequential data, as in language translation or, in this case, music generation.
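A toy version of self-attention makes the idea concrete. The sketch below computes single-head scaled dot-product attention over a few hand-made vectors, with a causal mask so each position only looks at itself and earlier positions, which is the variant an auto-regressive model needs. It uses no learned projections (queries, keys, and values are the raw inputs), so it illustrates the mechanism only and is not MusicGen's implementation.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(xs: List[Vector]):
    """Single-head scaled dot-product attention with a causal mask.

    Queries, keys, and values are all the raw inputs (no learned weights).
    Returns (outputs, attention_weights).
    """
    d = len(xs[0])
    outputs, weights = [], []
    for i, q in enumerate(xs):
        # Causal mask: position i may attend only to positions 0..i.
        scores = [sum(a * b for a, b in zip(q, xs[j])) / math.sqrt(d) for j in range(i + 1)]
        w = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors.
        out = [sum(w[j] * xs[j][k] for j in range(i + 1)) for k in range(d)]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outs, attn = causal_self_attention(tokens)
print([len(w) for w in attn])  # [1, 2, 3]: each position sees only its past
```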

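The term “auto-regressive” refers to how the model makes predictions: an auto-regressive model feeds its previous outputs back in as input for the next prediction. Imagine a conductor guiding an orchestra, where each note depends on the notes that came before it. That’s essentially what an auto-regressive Transformer model does, except that its “notes” are audio tokens, each predicted from the tokens generated so far. It’s a sequential process, with each step building on the last, allowing the model to generate complex and coherent compositions. “Single-stage” means that one Transformer predicts all four codebooks of those tokens together, instead of cascading several models that each refine the output of the previous one.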
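The loop below is a minimal sketch of auto-regressive generation. The "model" is a hypothetical lookup table of next-token probabilities over a tiny vocabulary of note names; MusicGen instead predicts EnCodec audio tokens with a Transformer that conditions on the whole history, but the control flow is the same: predict, append, and feed the longer sequence back in.

```python
import random
from typing import Dict, List

# Hypothetical "model": probability of the next token given the last one.
# A real Transformer would condition on the entire history, not just the last token.
NEXT_TOKEN_PROBS: Dict[str, Dict[str, float]] = {
    "C": {"E": 0.6, "G": 0.4},
    "E": {"G": 0.7, "C": 0.3},
    "G": {"C": 0.5, "E": 0.5},
}


def generate(prompt: List[str], steps: int, rng: random.Random) -> List[str]:
    """Extend `prompt` one token at a time, feeding each output back in."""
    sequence = list(prompt)
    for _ in range(steps):
        probs = NEXT_TOKEN_PROBS[sequence[-1]]  # condition on what came before
        tokens, weights = zip(*probs.items())
        next_token = rng.choices(tokens, weights=weights, k=1)[0]  # sample one token
        sequence.append(next_token)  # the output becomes part of the next input
    return sequence


print(generate(["C"], steps=8, rng=random.Random(0)))
```

Sampling, rather than always taking the most likely token, is what lets the same prompt yield different pieces on different runs; fixing the seed makes a run reproducible.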

Harmonizing with AI: The Power of MusicGen

MusicGen is an open-source text-to-music model created by Meta AI (Facebook), with the code publicly available on GitHub for enthusiasts to experiment with. In addition to producing high-quality audio, MusicGen shows a remarkable grasp of genres, and soon users will be able to train their own models, further amplifying the creative possibilities.

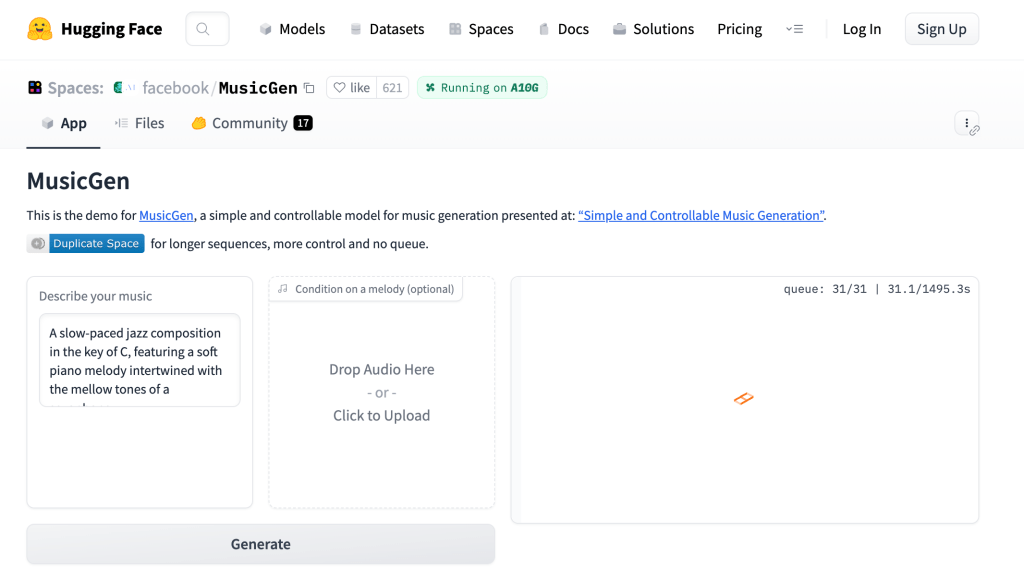

My experience with this tool was remarkable. As a jazz aficionado, I began my journey by feeding MusicGen a simple instruction: “An upbeat jazz composition in the key of C, featuring a soft piano melody intertwined with the mellow tones of a saxophone with bass”. To my delight, MusicGen composed a beautiful piece that was rich in tone, depth, and complexity. Listening to my very own jazz composition was an exhilarating experience.

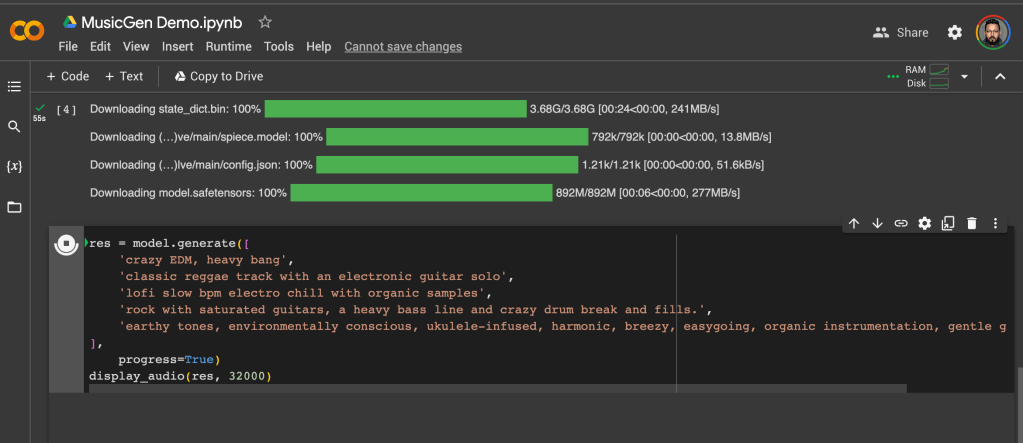

Orchestrating a New Creative Landscape: Running MusicGen

Running MusicGen is a straightforward process. Google Colab offers excellent performance with minimal setup, while Hugging Face Spaces is slower but offers unlimited output. For those with a capable GPU, running the model locally offers unlimited flexibility.
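For the local option, Audiocraft exposes a Python API. The sketch below follows its documented pattern (`MusicGen.get_pretrained`, `set_generation_params`, `generate`, and `audio_write`), assuming `audiocraft` is installed and a suitable GPU is available. The helper names `clamp_duration` and `generate_clips`, the `facebook/musicgen-small` default, and the 30-second cap (taken from the limitation this article discusses) are assumptions to check against the version you install.

```python
MAX_SECONDS = 30.0  # assumption: clip-length cap discussed in this article


def clamp_duration(seconds: float) -> float:
    """Hypothetical helper: keep the requested length within (0, MAX_SECONDS]."""
    if seconds <= 0:
        raise ValueError("duration must be positive")
    return min(float(seconds), MAX_SECONDS)


def generate_clips(prompts, seconds=8.0, checkpoint="facebook/musicgen-small", stem="clip"):
    """Hypothetical helper: generate one clip per prompt, saved as <stem>_<i>.wav."""
    # Imported inside the function so this file loads even without audiocraft installed.
    from audiocraft.models import MusicGen
    from audiocraft.data.audio import audio_write

    model = MusicGen.get_pretrained(checkpoint)  # downloads weights on first use
    model.set_generation_params(duration=clamp_duration(seconds))
    wavs = model.generate(prompts)  # one waveform per prompt
    for i, wav in enumerate(wavs):
        # audio_write appends the .wav suffix; "loudness" normalizes the output level
        audio_write(f"{stem}_{i}", wav.cpu(), model.sample_rate, strategy="loudness")
```

Calling `generate_clips(["An upbeat jazz composition in the key of C, featuring a soft piano melody intertwined with the mellow tones of a saxophone with bass"], seconds=15)` would write `clip_0.wav`. Swapping the checkpoint for a larger one trades speed and memory for quality.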

Fine-Tuning the Symphony: Limitations and Room for Improvement

While MusicGen opens up a world of possibilities, it does have its limitations. Currently, it does not support vocals or sound effects, and audio clips are limited to a maximum of 30 seconds. Additionally, it requires a GPU with 16 GB of memory, which might be a barrier for some users. As AI and machine learning continue to evolve, we anticipate improvements in these areas.
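A rough calculation suggests why the larger checkpoints are demanding. The sketch counts only the weights of the three model sizes, at 4 bytes per parameter (fp32) or 2 bytes (fp16), in decimal gigabytes. It ignores activations, the text encoder, and the EnCodec decoder, so real usage is higher, and which precision a given setup runs in is an assumption.

```python
SIZES = {"300M": 300_000_000, "1.5B": 1_500_000_000, "3.3B": 3_300_000_000}
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_gb(num_params: int, dtype: str) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, n in SIZES.items():
    print(f"{name}: {weight_gb(n, 'fp32'):.1f} GB fp32, {weight_gb(n, 'fp16'):.1f} GB fp16")
# 300M: 1.2 GB fp32, 0.6 GB fp16
# 1.5B: 6.0 GB fp32, 3.0 GB fp16
# 3.3B: 13.2 GB fp32, 6.6 GB fp16
```

At full precision, the 3.3B model's weights alone approach a 16 GB card's capacity before any working memory is counted.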

A Rhapsody: Beyond the Boundaries of Genres

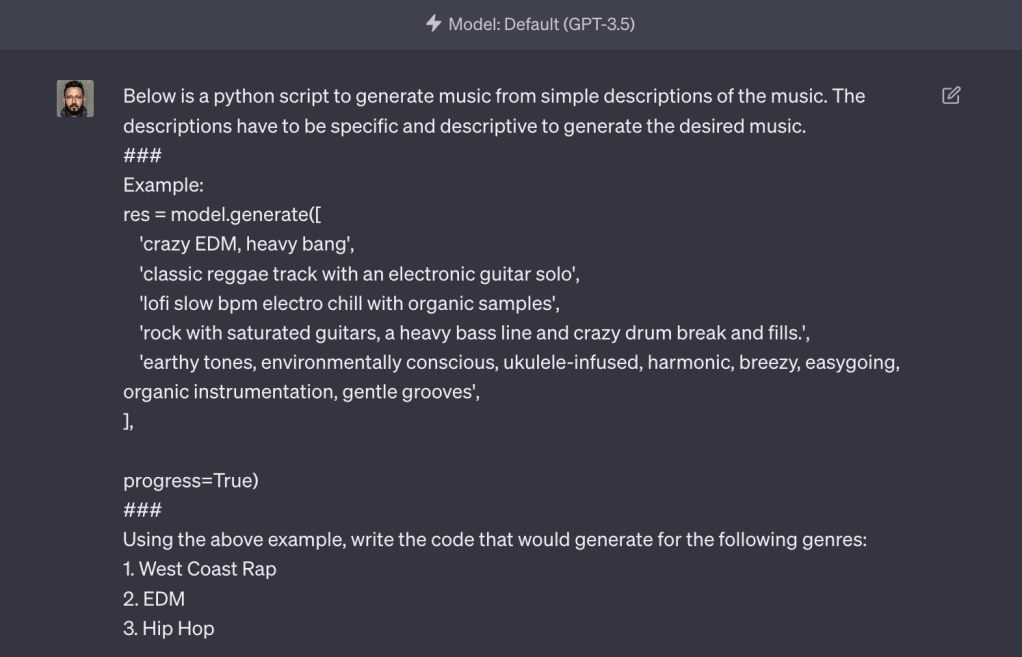

MusicGen’s capabilities extend far beyond jazz. In my experimentation, I found it could produce a variety of genres, from techno beats to reggaeton, and even different styles of rap. By simply adjusting the textual prompt, you can explore a diverse range of musical styles, from the upbeat rhythms of West Coast rap to the gritty beats of East Coast rap. The results are impressive, although, as noted earlier, the model struggles with vocals and sound effects.
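Because the genre lives entirely in the text prompt, exploring styles can be as simple as filling in a template. The helper below is a hypothetical sketch; the template wording and instrument description are assumptions, and the resulting list could be passed as the list of descriptions that Audiocraft's `generate` method accepts.

```python
from typing import List

# Hypothetical template; the wording is illustrative only.
TEMPLATE = "A track in the style of {genre}, featuring {instruments}"


def genre_prompts(genres: List[str], instruments: str) -> List[str]:
    """Build one text prompt per genre from a shared template."""
    return [TEMPLATE.format(genre=g, instruments=instruments) for g in genres]


prompts = genre_prompts(
    ["techno", "reggaeton", "West Coast rap", "East Coast rap"],
    instruments="punchy drums and a deep bass line",
)
for p in prompts:
    print(p)
```

Holding the instrumentation fixed while varying only the genre makes it easier to hear what the genre word alone changes.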

Conducting with AI: A Hack for Better Descriptions

If you’re not musically inclined, you can employ a simple hack to generate better music descriptions for the model. Using OpenAI’s language model, GPT-3, you can provide a few examples and mention the genres you’re interested in. GPT-3 will then generate a detailed description of the music that you can use as input for MusicGen. I used this method and was pleasantly surprised by the quality of the descriptions.
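This hack is ordinary few-shot prompting: show the language model a few request/description pairs, then leave the last description blank for it to complete. The sketch below only builds the prompt text; the header wording is an assumption, the single example reuses my jazz prompt from earlier, and sending the string to GPT-3 (or any other model) is left to whichever client you use.

```python
from typing import List, Tuple

# Example pairs of (genre, detailed description); add more for better results.
EXAMPLES: List[Tuple[str, str]] = [
    ("jazz", "An upbeat jazz composition in the key of C, featuring a soft piano "
             "melody intertwined with the mellow tones of a saxophone with bass"),
]


def few_shot_prompt(examples: List[Tuple[str, str]], genre: str) -> str:
    """Assemble a few-shot prompt: worked examples first, then the new request."""
    parts = ["Write a detailed, instrument-level description of a piece of music."]
    for g, description in examples:
        parts.append(f"Genre: {g}\nDescription: {description}")
    # Leave the final description empty for the language model to complete.
    parts.append(f"Genre: {genre}\nDescription:")
    return "\n\n".join(parts)


print(few_shot_prompt(EXAMPLES, "reggaeton"))
```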

A Crescendo in the World of Music: Looking Ahead

The future of MusicGen looks promising, particularly with the prospect of custom models on the horizon. Once Facebook releases the training code, users will have the opportunity to train their own models, potentially leading to outputs with vocals, sound effects, and even more specific music genres.

MusicGen’s transformative potential is undeniable. By making music creation accessible to all, it unlocks a wealth of creativity and gives birth to a new era of AI-driven music innovation. For me, MusicGen has opened a new world of possibilities in my journey with jazz. I encourage you to dive into this new era and create your own symphony with MusicGen.

Despite its current limitations, MusicGen is a ground-breaking tool that has the potential to democratize music creation. It’s an exciting time to be a part of this AI-driven revolution in music.